Automated tests are important to ensure the quality of your application. In the literature, you'll find many kinds of automated tests such as unit tests, integration tests, functional tests, smoke tests, and so on. I don't like this naming because some tests don't fit in any category and people give the categories different meanings, which makes the terms ambiguous.

Tests are about trade-offs:

- Confidence: Does the test ensure the application is working as expected?

- Duration: How long the test takes to run

- Reliability: Is the test reliable? Does it fail randomly?

- Effort to write: Is the test scenario easy to implement?

- Maintenance cost: How much time do you need to update the test when the code changes?

My way of writing tests has evolved significantly over time. My tests are now far more effective, and their number has substantially decreased.

Initially, I wrote tests for almost every individual public method in all classes of a library. I mocked all external dependencies, ensuring that each test validated only the behavior within the tested method. I sometimes exposed internal classes to the test library to ensure comprehensive testing. This resulted in numerous tests, with each unit of functionality being rigorously tested.

I've now adopted a new strategy that proved to be highly effective on the application I developed. My current practices include:

- Testing mainly the public API, as the internals are implicitly tested and included in code coverage metrics. Note that the public API may not always be classes or methods, but the entry point of the application or HTTP endpoints.

- Minimizing the use of mocks, reserving them for special cases like clients interfacing with external services

- Using strict static analysis, so you can avoid writing tests for things that can be detected at compilation time

- Writing more assertions in the code (e.g. Debug.Assert)

This methodology offers several advantages:

- Significantly fewer tests to maintain

- User scenarios are clearly identified and tested

- No need to rewrite tests during code refactoring or changes in implementation as long as the behavior is unchanged.

- The ability to refactor code safely while ensuring unchanged behavior.

- Assistance in identifying obsolete code segments.

#Test the public API

The public API is how your application is exposed to the users. The public API can be different based on the type of projects you are building. Here are some examples:

- Library: The public API is the classes and methods exposed to the users of the library

- Web API: The public API is the set of HTTP endpoints

- CLI application: The public API is the command-line arguments

- A NuGet package such as my Coding Standard: The public API is the package

Why should you test the public API?

- You ensure the application is working as expected as you are testing the application as a whole, the same way your users will use it

- You are not coupled with the implementation details, so you can refactor the code without updating the tests as long as the behavior doesn't change

- You will write fewer tests than when testing many individual classes or methods

- You increase the code coverage as you test more parts of the application

- You can debug your application more easily as you can reproduce full scenarios

For instance, if you write an ASP.NET Core application, you should not test a Controller directly. Instead, use a WebApplicationFactory to configure and start the server, then send a request to the server and assert the response. This way, you test the application as a whole, including the routing, model binding, filters, middleware, Dependency Injection, etc. Also, you can refactor the application to handle requests using Minimal API, a custom middleware, or a static file without updating the tests.

I'm not saying you should never test the implementation details. You should sometimes test them, but this should be limited to a few test cases. This can be useful when you want to validate a specific behavior or when a complex method is hard to exercise through the public API.

Testing the public API doesn't mean you can only do black-box testing. You can use gray-box testing and replace some dependencies. For instance, you may want to test how your application behaves when a third-party API returns an error. In this case, you can mock the API to return the error, while still testing the rest of the application.
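As a rough sketch of this gray-box approach, the test double below replaces only the HTTP transport, so the rest of the code still runs for real. FixedStatusHandler, WeatherService, the URL, and the fallback text are hypothetical names made up for this illustration; only HttpClient and HttpMessageHandler come from .NET.

```csharp
using System.Net;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical test double: answers every request with a fixed status code.
public sealed class FixedStatusHandler : HttpMessageHandler
{
    private readonly HttpStatusCode _statusCode;

    public FixedStatusHandler(HttpStatusCode statusCode) => _statusCode = statusCode;

    protected override Task<HttpResponseMessage> SendAsync(
        HttpRequestMessage request, CancellationToken cancellationToken)
    {
        // No network call is made; the response is built in memory.
        var response = new HttpResponseMessage(_statusCode) { RequestMessage = request };
        return Task.FromResult(response);
    }
}

// Hypothetical application code that depends on a third-party HTTP API.
public sealed class WeatherService
{
    private readonly HttpClient _httpClient;

    public WeatherService(HttpClient httpClient) => _httpClient = httpClient;

    // Returns a fallback text instead of throwing when the remote API fails.
    public async Task<string> GetForecastAsync()
    {
        using var response = await _httpClient.GetAsync("https://weather.example.com/forecast");
        if (!response.IsSuccessStatusCode)
            return "Forecast unavailable";

        return await response.Content.ReadAsStringAsync();
    }
}
```

In a test, you would pass new HttpClient(new FixedStatusHandler(HttpStatusCode.ServiceUnavailable)) to the application and assert that the user still gets a sensible response.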

But you might wonder, doesn't the term "unit test" imply that you should test every single unit of implementation? Well, not exactly. Kent Beck, the author of Test Driven Development: By Example, acknowledged that the name "unit test" was perhaps not the best choice. In this context, the "unit" refers to a specific behavior, which could involve interactions with multiple units of implementation. Some people even define a "unit" as the entire module or library, and that perspective is valid as well. Some people use the terms "isolated" and "sociable" tests to differentiate between tests that use mocks and tests that don't.

#Write code to help you write tests

Writing a test should be simple and the test code should be easy to understand. Also, your tests should be coupled to the behavior of the application, not the implementation details. That's why I try to create a test infrastructure that simplifies the test writing process by creating an abstraction. I often use a MyAppTestContext class that contains the common code to write tests. For instance, it can contain the code to start the application, send a request, assert the response, register a mock, redirect logs to the ITestOutputHelper, etc. You can see this class as an SDK for the application under test. This way, you can focus on writing the test scenario and not the boilerplate code.

Here is an example of a MyAppTestContext class usage:

```csharp
// Arrange
using var testContext = new MyAppTestContext(testOutputHelper);
testContext.ReplaceService<IService, MockImplementation>();
testContext.SetConfigurationFile("""{ "path": "/tmp" }""");
testContext.SeedDatabase(db => db.Users.Add(new User { Username = "meziantou" }));
var user = testContext.SetCurrentUser("meziantou");

// Act
var response = await testContext.Get("/me");

// Assert
InlineSnapshot.Validate(response, """
    Id: 1
    UserName: meziantou
    """);
```

#Do you need to mock dependencies?

Mocks are a way to test your application without using real dependencies. So, each time you use a mock, you are not testing the production application, but another flavor of the application. The idea is to keep this flavor as close as possible to the production use case. But this is not an easy task. Also, it can be hard to maintain tests using mocks. The services you are mocking can change their behavior, and you may not detect it as you are not using them directly. Also, the tests can become harder to write as you need to write lots of code to set them up.

First, do you really need mocks? Developers tend to use too many mocks. For instance, do you need to mock the file system? The file system is not trivial to mock and many people have wrong assumptions about it. For instance, the file system can be case-sensitive on Windows (case sensitivity can be enabled per directory). Yes, you read that right: you can create multiple files that differ only by casing! Also, some file names are invalid on Windows (e.g. COM0, PRN, LPT0, etc.) and some characters are invalid. In the case of a multi-platform application, you may need to test OS-specific behaviors. Why don't you just write to a temporary directory? The same goes for a database: why not use a Docker container to run the DB, so you can test the SQL queries and the database constraints?

Most of the time, you should reserve mocks for external services, the ones that are not under your control. Indeed, why would you make your application and tests more complex by adding abstractions and using mocks when you can use the actual dependencies? Also, consider which parts of the application end up untested because all tests use mocks.

You should consider using the actual services when possible. Of course, you still want the tests to be isolated. There are lots of strategies here. For instance, if you need to read or write files, you can create a temporary directory for each test. If you start a database using a Docker container, you can use different database names to isolate tests. You can also rely on emulators provided by the cloud providers to run some dependencies locally. This way, you are sure that the code is working as expected and you don't need to write complex mocks. Make sure your application can be configured, so you can easily provide different connection strings or a different root folder for the application data.

Useful tools to avoid mocks:

- Starting Docker containers of the services you need to test: Testcontainers or .NET Aspire can be useful. If starting a Docker container is slow, you can reuse it between multiple test runs. Note that .NET Aspire can also provision resources in the Cloud (Azure, AWS, etc.).

- Using emulators provided by the service provider. For instance, Azure provides an emulator for Azure Storage.

- Using the file system. A temporary directory gives each test a disposable location for its files.
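To illustrate the last point, here is a minimal sketch of a disposable directory for tests. TemporaryTestDirectory is a hypothetical helper written for this example, not a class from an existing library; it only relies on System.IO.

```csharp
using System;
using System.IO;

// Hypothetical helper: creates a unique directory and deletes it on dispose.
public sealed class TemporaryTestDirectory : IDisposable
{
    public string FullPath { get; }

    public TemporaryTestDirectory()
    {
        // A GUID-based name keeps tests isolated, even when they run in parallel.
        FullPath = Path.Combine(Path.GetTempPath(), "tests-" + Guid.NewGuid().ToString("N"));
        Directory.CreateDirectory(FullPath);
    }

    // Builds a path inside the temporary directory.
    public string GetPath(string relativePath) => Path.Combine(FullPath, relativePath);

    public void Dispose()
    {
        // Remove the directory and everything the test created in it.
        if (Directory.Exists(FullPath))
            Directory.Delete(FullPath, recursive: true);
    }
}
```

A test can declare using var directory = new TemporaryTestDirectory();, configure the application to use directory.FullPath as its data folder, and assert on the real files. The directory is deleted when the test ends.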

#Mock Frameworks

Mocks can be useful to test specific scenarios that are hard to reproduce. For instance, you may want to test that your application can handle a specific error returned by a third-party API that is not easy to reproduce. In this case, you can mock the API to return the expected error.

I tend to avoid using mock frameworks. Instead, I prefer crafting test doubles manually. Hand-written test doubles can simulate real-world scenarios more closely than mocking frameworks. They can retain state across multiple method calls, which can be challenging with mocking frameworks. Indeed, with a mocking framework you may need to verify that the mock is called in the right order (e.g. Save before Get) and the right number of times, which can be hard to maintain.

Additionally, when debugging tests, you can easily step into the code with manual test doubles, whereas this isn't always feasible with mocking frameworks. By adding custom methods to the test doubles for setup or content assertion, you can enhance expressiveness, making the tests more readable and easier to maintain. These test doubles can also be reused across multiple tests.

While it might appear more labor-intensive, the effort isn't substantial. You typically don't deal with an excessive number of mock classes, and you can reuse a generic implementation across various tests. Consequently, you avoid duplicating dense code blocks for setup in each test.

When I need to simulate a specific behavior, I wrap the manually written stub with FakeItEasy and override only the methods I need to simulate the specific behavior.

```csharp
// Using a Mock framework to simulate a specific behavior
// What if the service calls GetAsync before SaveAsync?
var service = A.Fake<IService>();
A.CallTo(() => service.SaveAsync()).Returns(true);
A.CallTo(() => service.GetAsync()).Returns(["value"]);

var sut = new MyClass(service);
sut.DoSomething();
```

```csharp
// Using a stub written manually
var service = new StubService();
service.AddItem("value"); // Add initial data

var sut = new MyClass(service);
sut.DoSomething();
```
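For reference, a hand-written stub like the one used above could look like the following sketch. The snippets only show the method names, so the signatures of IService are assumptions, and SaveCount is a hypothetical property added to make assertions easier.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;

// Assumed shape of the interface; only the method names come from the snippets above.
public interface IService
{
    Task<bool> SaveAsync();
    Task<string[]> GetAsync();
}

// Hand-written test double that keeps its state between calls.
public sealed class StubService : IService
{
    private readonly List<string> _items = new List<string>();

    // Hypothetical helper for assertions: how many times SaveAsync was called.
    public int SaveCount { get; private set; }

    // Setup method used by tests to add initial data.
    public void AddItem(string item) => _items.Add(item);

    public Task<bool> SaveAsync()
    {
        SaveCount++;
        return Task.FromResult(true);
    }

    // Returns a copy of whatever the test has added so far.
    public Task<string[]> GetAsync() => Task.FromResult(_items.ToArray());
}
```

Because the stub is plain code, the order in which the service calls GetAsync and SaveAsync doesn't matter, and you can step into it with the debugger.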

You can read the blog post Prefer test-doubles over mocking frameworks by Steve Dunn for more information.

#Should I add interfaces to be able to mock?

Interfaces that have a single implementation and exist only to enable mocking are not useful. They just add complexity to the code. They also reduce the IDE capabilities. For instance, if you use an interface, you cannot use the "Go to implementation" feature directly.

If possible, you should not complicate the code just to be able to use mocks. If there will be a single implementation in the code, then you may not need an interface. There are alternatives, and some components are already mockable without any changes:

- Use virtual methods, so you can override the behavior in a derived class in a test

- Use a delegate (such as Action) to override the behavior, so you can provide a custom implementation in a test

- Provide a configuration to allow the user to provide a custom implementation

- HttpClient is mockable without adding an interface (see Mocking an HttpClient using ASP.NET Core TestServer)
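Here is a small sketch of the delegate option. TrialLicense is a hypothetical class invented for this example: instead of introducing a clock interface, it accepts a Func that returns the current time, and a test can pass its own.

```csharp
using System;

// Hypothetical class: the clock is a delegate, so no extra interface is needed.
public sealed class TrialLicense
{
    private readonly DateTimeOffset _expiresAt;
    private readonly Func<DateTimeOffset> _utcNow;

    // Production code uses the system clock.
    public TrialLicense(DateTimeOffset expiresAt)
        : this(expiresAt, () => DateTimeOffset.UtcNow)
    {
    }

    // Tests can provide a custom implementation of the clock.
    public TrialLicense(DateTimeOffset expiresAt, Func<DateTimeOffset> utcNow)
    {
        _expiresAt = expiresAt;
        _utcNow = utcNow;
    }

    public bool IsExpired => _utcNow() >= _expiresAt;
}
```

A test can then write new TrialLicense(expiresAt, () => expiresAt.AddDays(1)) and check IsExpired without waiting or touching the system clock.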

#Write more assertions in the code

While assertions in the tests are the most common way to validate the behavior of the code, you can also write assertions in the code. For instance, you can use Debug.Assert to validate the state of the application. This can be useful to validate some assumptions you have about the code. One benefit is that it will throw early. Also, these assertions run when you debug the application, not only when running tests.

These assertions can also improve the readability of the code. Indeed, you can see the expected behavior directly in the code. This can be very interesting when writing complex code or when you want to validate a specific behavior.

```csharp
public void DoSomething()
{
    Debug.Assert(_service != null, "This should not be possible");
    _service.DoSomething();
}
```

#Test Frameworks

Just use the one you prefer. xUnit, NUnit, and MSTest are all very similar in terms of features. The syntax may be different, but the concepts are the same. For the assertions, you can use the built-in assertions or a library like FluentAssertions.

You can also use a snapshot testing library like Verify or InlineSnapshotTesting. These libraries allow you to write assertions that are readable and very expressive.

#Code coverage

Code coverage is a useful metric, but it shouldn't be your primary goal. Aiming for 100% coverage often leads to diminishing returns and several issues:

- Coverage doesn't guarantee quality: Achieving 100% coverage doesn't mean your application works correctly. Coverage is merely a number—it doesn't ensure your tests are meaningful or effective.

- Scope ambiguity: Are you measuring 100% of your application code or including all dependencies? The distinction matters significantly.

- Diminishing returns: The effort required to cover the final 10-20% of code often outweighs the benefits. This time could be better spent on other quality improvements.

Consider whether every code path requires testing. For example, testing that a method throws ArgumentNullException for null parameters rarely adds meaningful confidence—it's often just ceremony that wastes development time.

Instead, focus on achieving meaningful coverage that builds genuine confidence in your application. A coverage range of 70-80% typically strikes an excellent balance between confidence and development effort, ensuring critical paths are thoroughly tested without getting mired in trivial edge cases. However, for mission-critical components of your application, aiming for comprehensive coverage may be warranted.

Remember: tests should provide sufficient confidence in your code's behavior, not check every possible execution path.

#Static analysis

While this post is about testing, you should also use static analysis tools. These tools can help you find issues in your code before running the tests. For instance, you can use the stricter features of the compiler, Roslyn Analyzers, NDepend, etc. These tools can help you find issues like null reference exceptions, unused variables, etc.

All these tools can help to detect issues at compile time. This can reduce the number of tests you need to write.
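As an illustration, nullable reference types let the compiler flag a possible null dereference, so you don't need a test for it. UserFormatter is a hypothetical class used only for this sketch.

```csharp
#nullable enable

public static class UserFormatter
{
    // 'displayName' is declared as possibly null, so the compiler tracks it.
    public static string Initial(string? displayName)
    {
        // Writing 'displayName[0]' before this check would produce warning CS8602
        // (dereference of a possibly null reference) at compile time.
        if (string.IsNullOrEmpty(displayName))
            return "?";

        return displayName[0].ToString().ToUpperInvariant();
    }
}
```

If you also set TreatWarningsAsErrors in the project file, this kind of warning fails the build instead of being something a test must catch.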

#Test flakiness

Tests should be reliable. If a test fails, it should be because there is a bug in the code, not in the test. If a test fails randomly, you will lose confidence in the test suite. You will start to ignore the failing tests, and you will not trust the test suite anymore. Also, people will waste time retrying the tests on the CI to be able to merge their pull requests. This means you will increase the time to merge and deploy your application.

There are multiple ways to handle flaky tests:

- Fix the test. That's the best solution.

- Quarantine the test and create a task to fix it later. This way, you can still merge your pull requests and deploy your application.

- Remove the test. If the test is not useful, you can remove it.

Some CI tools can detect test flakiness. For instance, Azure DevOps can do it: Manage flaky tests

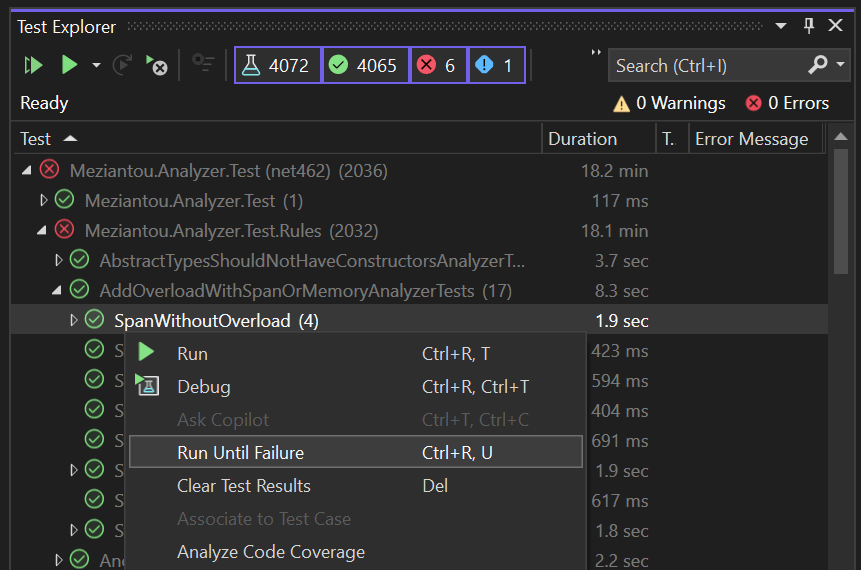

If you need to reproduce a flaky test locally, you can use Visual Studio or Rider to run the test until it fails. This way, you can debug the test and understand why it fails.
